Projects

Research projects on data-efficient foundation models, multimodal perception, and federated learning.

Data-Efficient Foundation Models & Multimodal Systems

I work on making large models more practical under real-world constraints on data, compute, latency, and communication:

- Foundation Models & Data Selection — select small, high-impact subsets for LLMs and vision models using training dynamics and attention structure.

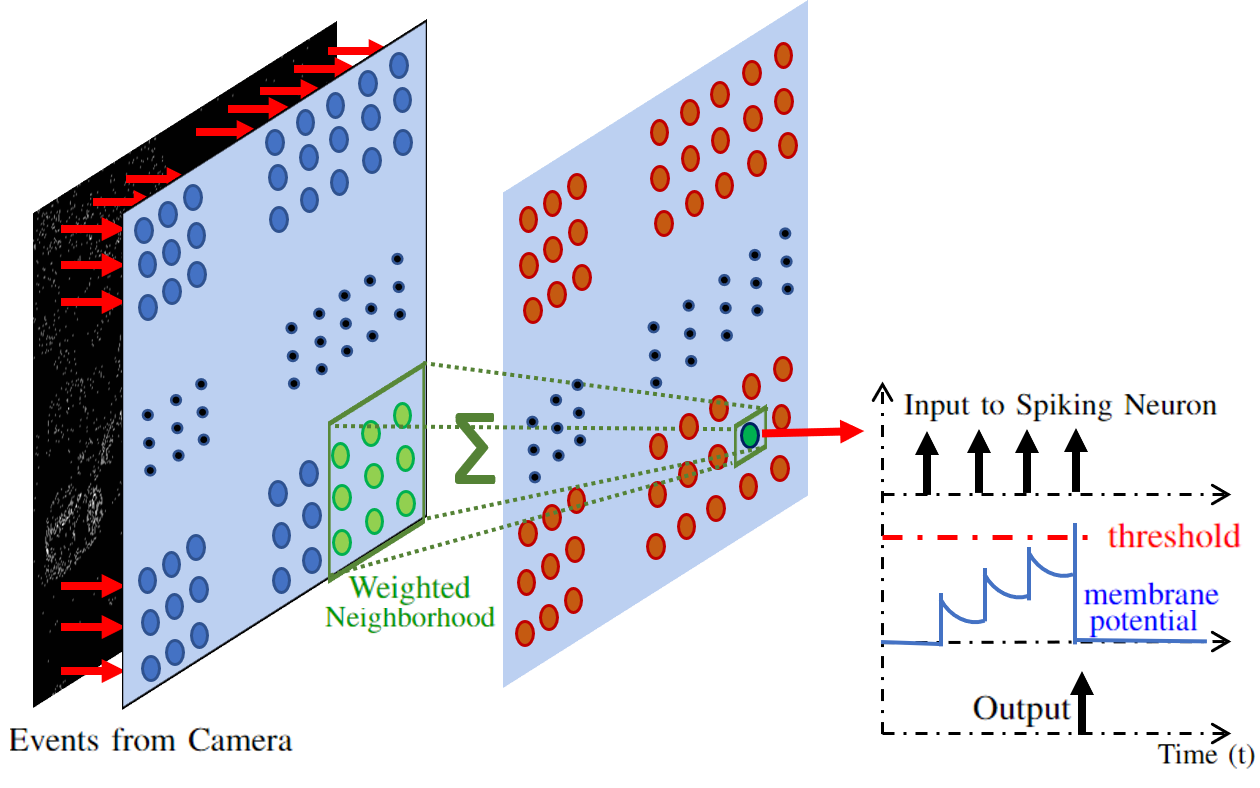

- Multimodal Perception & Robotics — build lightweight, low-latency perception systems using event-based sensing and efficient architectures.

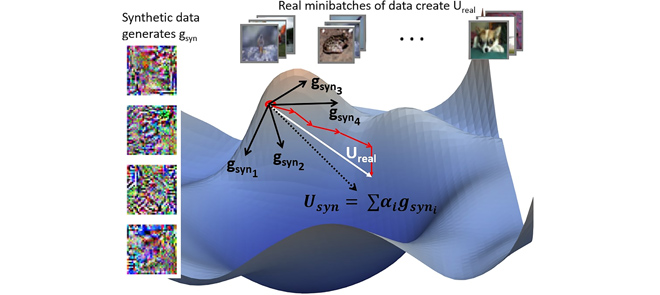

- Federated & Distributed Learning — reduce communication, preserve privacy, and keep performance competitive in real-world, heterogeneous settings.

Foundation Models & Data Selection

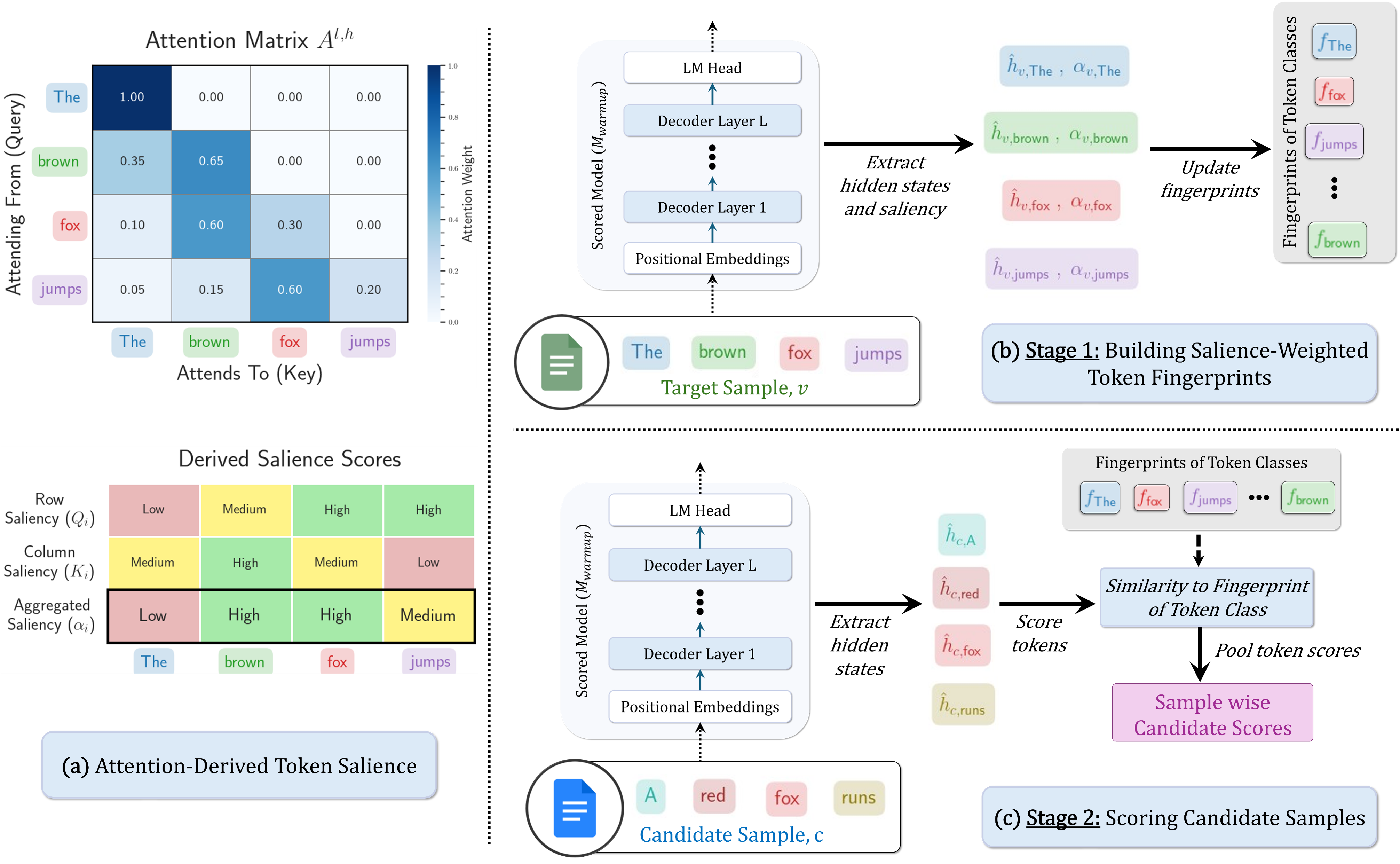

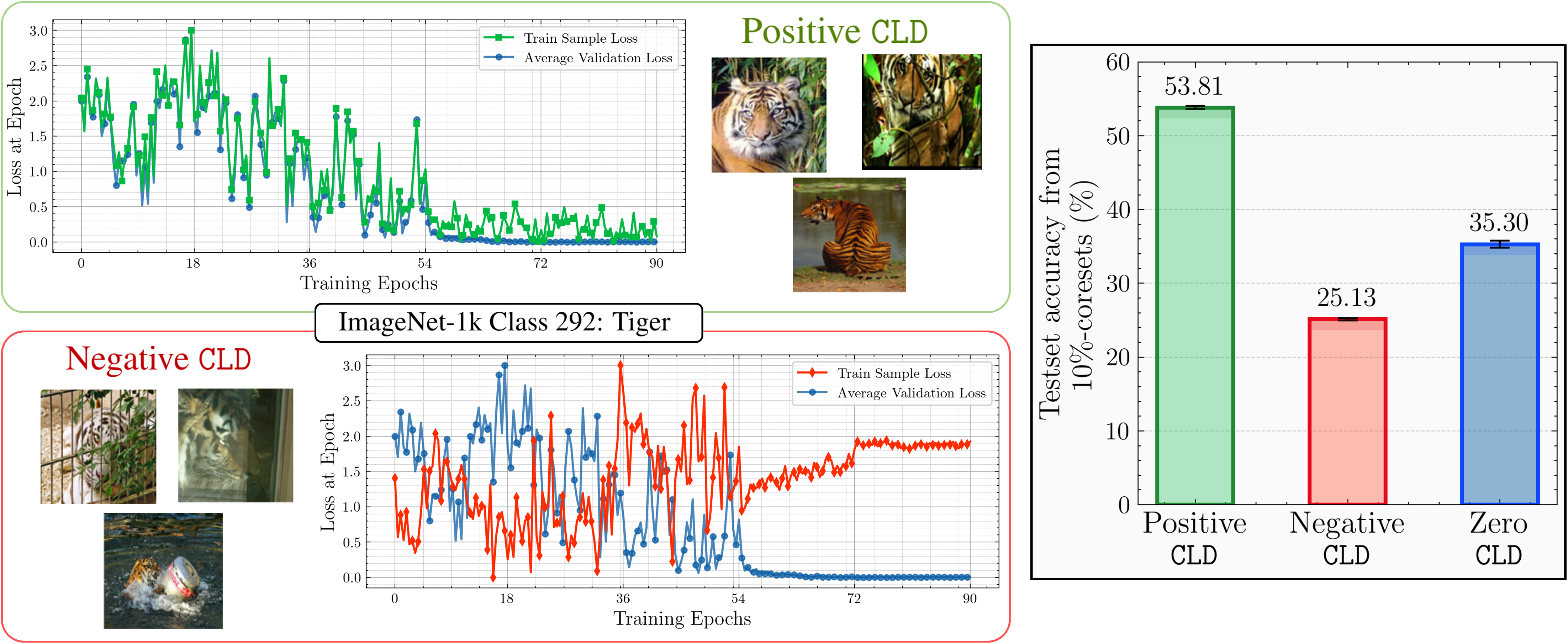

Select less, learn more: Develop methods such as attention-based token saliency and loss-trajectory coresets that train LLMs and vision models on a small fraction of the data without sacrificing accuracy.
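The sketch below is a generic illustration of the idea behind loss-trajectory data selection. It is not the specific method developed in these projects. It records each example's training loss over a few epochs and keeps the examples that stay hard or whose loss is still unstable. The scoring rule (mean plus standard deviation of the loss across epochs) and the function name `select_coreset` are hypothetical choices made for this example.

```python
import numpy as np


def select_coreset(loss_history: np.ndarray, budget: int) -> np.ndarray:
    """Pick `budget` example indices from per-example loss trajectories.

    loss_history has shape (num_epochs, num_examples). The score used here
    is a hypothetical choice: mean loss (persistently hard examples) plus
    standard deviation (examples the model has not settled on yet).
    """
    if loss_history.ndim != 2:
        raise ValueError("loss_history must have shape (num_epochs, num_examples)")
    num_examples = loss_history.shape[1]
    if not 1 <= budget <= num_examples:
        raise ValueError("budget must be between 1 and num_examples")
    score = loss_history.mean(axis=0) + loss_history.std(axis=0)
    # argsort is ascending: take the last `budget` entries, highest score first.
    return np.argsort(score)[-budget:][::-1]


# Toy trajectories: 3 epochs x 4 examples.
history = np.array([
    [2.0, 0.5, 1.5, 0.2],
    [1.8, 0.3, 0.9, 0.1],
    [1.7, 0.2, 0.4, 0.1],
])
print(select_coreset(history, budget=2))  # prints [0 2]
```

Example 0 is consistently hard, and example 2 starts hard but its loss is still moving. Both are kept. Examples 1 and 3 are already learned and are dropped.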

Multimodal Perception & Robotics

Perceive faster, on cheaper hardware: Use event cameras and compact neural architectures to enable real-time detection and tracking for robotics and embedded platforms.

Federated & Distributed Learning

Learn collaboratively, communicate less: Build communication- and data-efficient federated learning methods that handle heterogeneous clients while preserving privacy and model quality.
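A common, generic way to cut communication in federated learning is top-k sparsification. Each client sends only the k largest-magnitude entries of its model update, and the server averages them weighted by each client's local dataset size (a FedAvg-style rule). The sketch below illustrates that general technique only and is not the methods described above. The helper names `topk_sparsify` and `aggregate` and the value of k are hypothetical.

```python
import numpy as np


def topk_sparsify(update: np.ndarray, k: int) -> tuple[np.ndarray, np.ndarray]:
    """Keep the k largest-magnitude entries; return (indices, values) to send."""
    if k < 1:
        raise ValueError("k must be at least 1")
    flat = update.ravel()
    k = min(k, flat.size)
    idx = np.argpartition(np.abs(flat), -k)[-k:]
    return idx, flat[idx]


def aggregate(shape, messages, num_samples) -> np.ndarray:
    """FedAvg-style weighted average of sparse client updates.

    messages: one (indices, values) pair per client.
    num_samples: local dataset size per client, used as averaging weights.
    Entries a client did not send are treated as zero.
    """
    total = float(sum(num_samples))
    dense = np.zeros(int(np.prod(shape)))
    for (idx, vals), n in zip(messages, num_samples):
        np.add.at(dense, idx, (n / total) * vals)
    return dense.reshape(shape)


# One communication round with two toy clients and k = 2.
global_model = np.zeros(6)
client_updates = [
    np.array([0.9, -0.1, 0.0, 0.05, -0.7, 0.2]),
    np.array([-0.2, 0.8, 0.1, 0.0, 0.3, -0.05]),
]
sizes = [300, 100]
msgs = [topk_sparsify(u, k=2) for u in client_updates]
global_model += aggregate(global_model.shape, msgs, sizes)
print(global_model)  # approximately [0.675, 0.2, 0.0, 0.0, -0.45, 0.0]
```

Each client transmits 2 of 6 values instead of the full vector. Practical systems often keep the dropped residual locally and add it to the next round's update (error feedback). This sketch omits that step for brevity.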