DOTIE

Detecting Objects through Temporal Isolation of Events

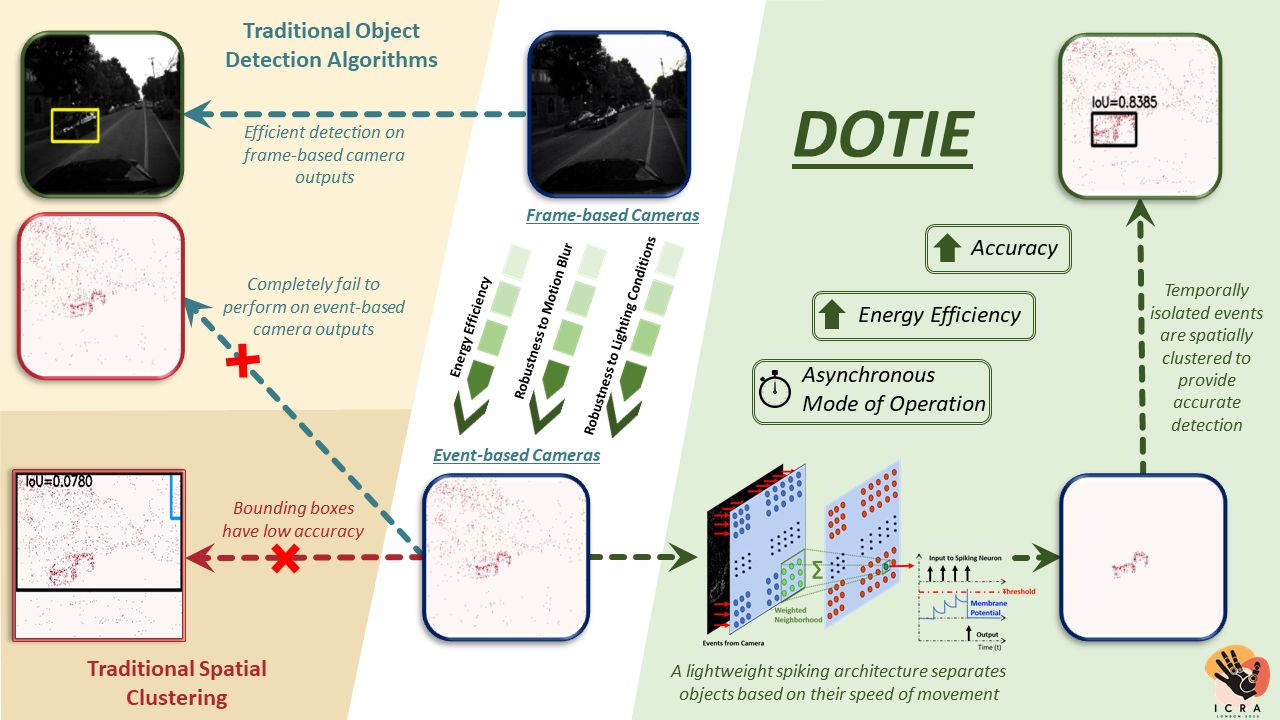

Motivation

Vision-based autonomous navigation systems need object detection algorithms that are fast, accurate, and computationally efficient, because deployment hardware has limited energy resources. Biologically inspired event cameras offer a promising solution as vision sensors due to their inherent advantages: high speed, energy efficiency, low latency, and robustness to varied lighting conditions. However, traditional computer vision algorithms often falter on event-based outputs because these outputs lack standard photometric features like light intensity and texture. This challenge motivates the exploration of the temporal features inherently present in events, which conventional algorithms often overlook. DOTIE (Detecting Objects through Temporal Isolation of Events) was developed to leverage this temporal information to efficiently detect moving objects using a lightweight spiking neural architecture.

Overview of Architecture

DOTIE employs a novel technique that capitalizes on the temporal information inherent in event data to detect moving objects. The core of DOTIE is a lightweight, single-layer spiking neural network (SNN) designed to separate events based on the speed of the objects that generated them.

The fundamental principles behind the architecture are:

- Events generated by the same object are temporally close to each other.

- Events generated by the same object are spatially close to each other.

The architecture utilizes Leaky Integrate and Fire (LIF) neurons, which are sensitive to the temporal structure of input events. By tuning hyperparameters like the threshold and leak factor, these neurons can identify input spikes (events) that occur close together in time, effectively filtering events based on object speed. Instead of connecting each pixel directly to a neuron, DOTIE uses a weighted sum from a neighborhood of pixels (e.g., 3x3) as input to each neuron. This allows the SNN to better identify fast-moving objects by considering the spatio-temporal pattern of events.
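The filtering behavior described above can be illustrated with a small sketch. It is not the authors' implementation: it assumes discrete time steps, a multiplicative leak factor, uniform 3x3 weights, and a reset to zero after each spike, and the names `lif_layer`, `frames`, `leak`, and `threshold` are hypothetical. Each time step is given as a set of `(row, col)` pixels that produced an event.

```python
def lif_layer(frames, height, width, weight=1.0, leak=0.5, threshold=1.6):
    """Single layer of LIF neurons, one per pixel, fed by a 3x3 neighborhood.

    Assumed model (not from the source): V <- leak * V + weight * (events in
    the 3x3 neighborhood); a neuron spikes when V >= threshold, then resets.
    Returns one set of (row, col) output spikes per input time step.
    """
    membrane = [[0.0] * width for _ in range(height)]
    output = []
    for active in frames:
        # Leak: every membrane potential decays once per time step.
        for r in range(height):
            for c in range(width):
                membrane[r][c] *= leak
        # Integrate: each event feeds the neurons whose 3x3 window contains it.
        for (r, c) in active:
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < height and 0 <= cc < width:
                        membrane[rr][cc] += weight
        # Fire and reset.
        spikes = set()
        for r in range(height):
            for c in range(width):
                if membrane[r][c] >= threshold:
                    spikes.add((r, c))
                    membrane[r][c] = 0.0
        output.append(spikes)
    return output


# Toy input: a fast object on row 1 moves one pixel per step, while a slow
# object on row 5 moves one pixel every four steps.
frames = []
for t in range(8):
    active = {(1, t)}
    if t % 4 == 0:
        active.add((5, t // 4))
    frames.append(active)

spikes = lif_layer(frames, height=7, width=12)
fired = set().union(*spikes)
print(sorted({r for r, _ in fired}))  # rows that produced output spikes
```

In this toy input only neurons around the fast object accumulate enough potential to fire; events from the slow object leak away before the next one arrives. Raising `leak` or lowering `threshold` would let slower objects through.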

The key advantages of this architecture include:

- Asynchronous operation, processing events as they are generated.

- Robustness to camera noise and events from static background objects.

- Low latency and energy overhead, because the SNN has a single layer and spiking neurons use accumulate operations rather than the multiply-and-accumulate operations of conventional networks.

- Scene independence, meaning the SNN parameters correspond to object speeds and can be fine-tuned before deployment without extensive retraining for new scenes.
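The link between neuron parameters and object speed can be made concrete under a simplified model. The derivation below is a sketch under assumptions that are not stated in the source: time is discrete, the leak multiplies the membrane potential by a factor λ (between 0 and 1) per step, each input event adds a weight w, and a moving object delivers one event to the neuron every Δ steps. The potential right after the k-th event, before any spike, is a geometric sum:

```latex
V_k \;=\; w \sum_{i=0}^{k-1} \lambda^{i\Delta}
    \;=\; w\,\frac{1-\lambda^{k\Delta}}{1-\lambda^{\Delta}}
    \;<\; \frac{w}{1-\lambda^{\Delta}}
```

Under these assumptions, a neuron with threshold θ eventually fires only if θ is below the bound on the right. A faster object means a smaller Δ and therefore a larger bound, so a threshold chosen between the bounds of two speeds passes only the faster one:

```latex
\frac{w}{1-\lambda^{\Delta_{\text{slow}}}} \;\le\; \theta \;<\; \frac{w}{1-\lambda^{\Delta_{\text{fast}}}}
```

For example, with w = 1 and λ = 0.5, events arriving every step (Δ = 1) give a bound of 2, while events arriving every fourth step (Δ = 4) give a bound of about 1.07, so any threshold between those values separates the two.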

Results and Demonstration

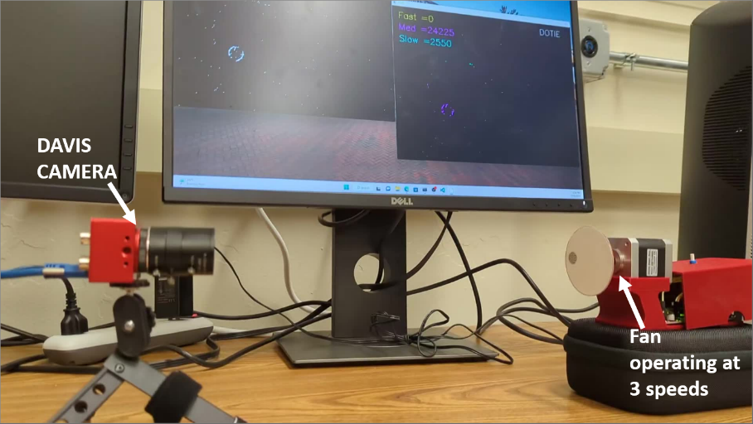

DOTIE can separate objects by speed using only event data. In one reported demonstration, a DAVIS346 event camera captured events from a disk rotated by an electric motor at three speeds (slow, medium, and fast). The spiking architecture was fine-tuned to separate the events corresponding to each speed, and the result was visualized by color-coding events according to their assigned speed bin.
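One way to picture the speed binning is a cascade of thresholds applied to a leaky integrator. The sketch below is a hypothetical scheme for illustration, not the method used in the demonstration: `peak_potential`, `speed_bin`, the threshold values, and the leak factor are all assumptions, and the neuron is modeled without firing so that its peak potential can be compared against each threshold.

```python
def peak_potential(event_times, leak=0.5, weight=1.0):
    """Peak potential of a non-firing leaky integrator driven by events
    at integer time steps (assumed model: multiplicative leak per step)."""
    potential, peak, last = 0.0, 0.0, None
    for t in sorted(event_times):
        if last is not None:
            potential *= leak ** (t - last)  # decay since the previous event
        potential += weight
        peak = max(peak, potential)
        last = t
    return peak


def speed_bin(event_times, thresholds=(1.2, 1.6),
              labels=("slow", "medium", "fast")):
    """Assign a speed label by counting how many thresholds the peak crosses."""
    peak = peak_potential(event_times)
    crossed = sum(peak >= th for th in thresholds)
    return labels[crossed]


# Events every step, every 2 steps, and every 4 steps.
for step in (1, 2, 4):
    print(step, speed_bin(range(0, 16, step)))
```

Denser event trains push the peak potential higher, so they cross more thresholds and land in a faster bin.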

To further validate DOTIE’s real-time capabilities, a live demonstration was conducted using an Arduino board to control the rotation speed of a disk at three distinct levels. A DVS (Dynamic Vision Sensor) event camera captured the asynchronous events generated by the rotating disk, and DOTIE was applied in real-time to process this event stream. The results of this demonstration are visualized below, showcasing DOTIE’s ability to track the moving disk.

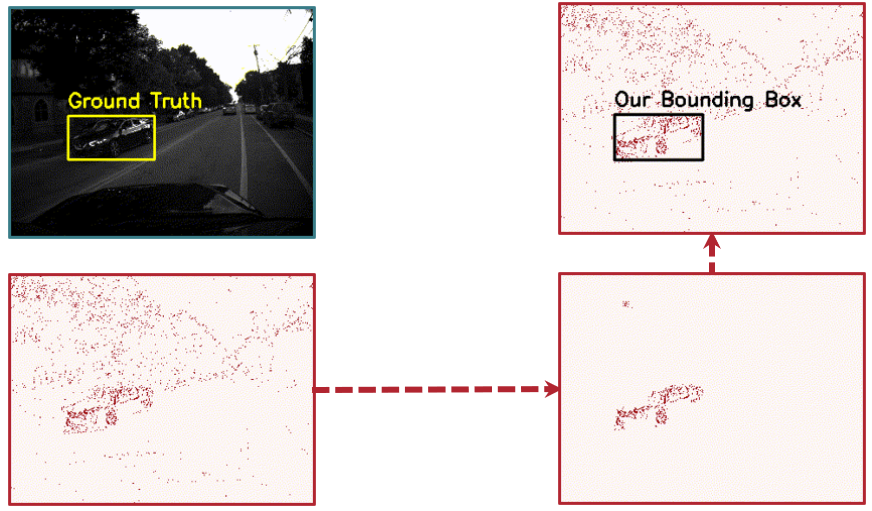

Accuracy: Compared to other event-based detection algorithms on the MVSEC dataset (outdoor day 2 segment), DOTIE (combined with DBSCAN for clustering) achieved significantly higher performance:

- Mean IoU: 0.8593

- Recall: 1.00

- Precision: 1.00

This outperforms methods like GMM, Meanshift, K-Means, GSCE, and DBSCAN alone, which struggled with background noise and with accurately delineating moving objects.
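The clustering step can be sketched as follows: the spikes that survive the spiking layer are grouped by DBSCAN, and each cluster is turned into a bounding box. This is a minimal, brute-force DBSCAN written with the standard library for illustration only. The function names, `eps`, and `min_pts` are assumptions rather than values from the source, and a production pipeline would more likely use an existing library implementation.

```python
def dbscan(points, eps, min_pts):
    """Label each 2D point with a cluster id (0, 1, ...) or -1 for noise."""
    labels = [None] * len(points)  # None means "not visited yet"

    def neighbors(i):
        xi, yi = points[i]
        return [j for j, (xj, yj) in enumerate(points)
                if (xi - xj) ** 2 + (yi - yj) ** 2 <= eps ** 2]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_pts:  # j is a core point: keep expanding
                queue.extend(reachable)
    return labels


def cluster_boxes(points, labels):
    """Axis-aligned box (x_min, y_min, x_max, y_max) for each cluster."""
    boxes = {}
    for (x, y), label in zip(points, labels):
        if label == -1:
            continue
        x0, y0, x1, y1 = boxes.get(label, (x, y, x, y))
        boxes[label] = (min(x0, x), min(y0, y), max(x1, x), max(y1, y))
    return boxes


# Two compact groups of spikes plus one isolated spike.
points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11),
          (30, 30)]
labels = dbscan(points, eps=1.5, min_pts=3)
boxes = cluster_boxes(points, labels)
print(labels)
print(boxes)
```

Because the spiking layer has already removed most background and noise events, the clustering step only has to group the few spikes that remain.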

Computational Efficiency: A key highlight of DOTIE is its energy efficiency, particularly when implemented on neuromorphic hardware.

- When implemented on Intel Loihi-2, DOTIE showed a dynamic energy consumption of 2.32 mJ per inference, compared to 28.74 mJ per inference on a CPU. By these figures, that is roughly a 12x reduction in energy consumption (28.74 / 2.32 ≈ 12.4).

- The estimated energy for the spiking layer during inference on the MVSEC outdoor day 2 segment was approximately 11.03 nJ. This is a substantial reduction compared to traditional artificial neural networks like YOLOv3, which was estimated to consume around 44.06 mJ for inference on the same dataset.

- While the CPU implementation achieved higher Frames Per Second (946 FPS) than Loihi-2 (266 FPS) in one reported experiment, the significant energy savings on neuromorphic hardware underscore its suitability for power-constrained applications.

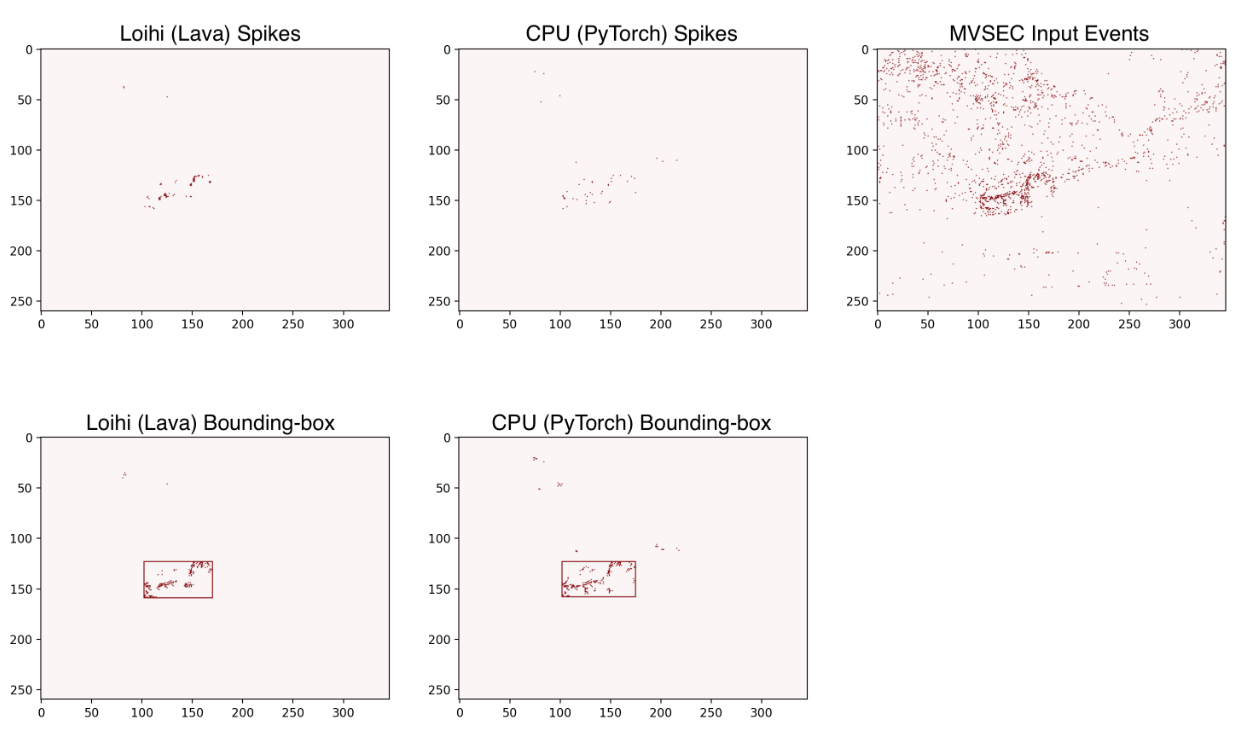

The algorithm is asynchronous and can operate at the rate events are generated, minimizing processing overhead in terms of latency. A bounding-box IoU score of roughly 80% was achieved between the Loihi (Lava simulator) and CPU (PyTorch) implementations on a subset of the MVSEC dataset.
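For reference, the bounding-box IoU used in comparisons like this one is the area of overlap between two boxes divided by the area of their union. The sketch below assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`; the function names and the example boxes are illustrative and do not come from the reported experiments.

```python
def box_iou(a, b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = overlap_w * overlap_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def mean_iou(box_pairs):
    """Average IoU over matched (box_a, box_b) pairs."""
    return sum(box_iou(a, b) for a, b in box_pairs) / len(box_pairs)


# Hypothetical boxes from two implementations of the same detector.
pairs = [((0, 0, 10, 10), (0, 0, 10, 10)),   # identical boxes
         ((0, 0, 10, 10), (5, 0, 15, 10))]   # half-overlapping boxes
print(mean_iou(pairs))
```

An IoU of 1 means the two boxes coincide exactly, and 0 means they do not overlap at all.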

This project showcases the advantages of using event cameras combined with spiking neural networks and neuromorphic hardware for efficient and low-latency object detection. You can find details in (Nagaraj et al., 2023) and (Roy et al., 2023).