Publications

Publications in reverse chronological order.

2025

- TOFFE–Temporally-binned Object Flow from Events for High-speed and Energy-Efficient Object Detection and Tracking. Adarsh Kumar Kosta, Amogh Joshi, Arjun Roy, Rohan Kumar Manna, Manish Nagaraj, and Kaushik Roy. arXiv preprint arXiv:2501.12482, 2025.

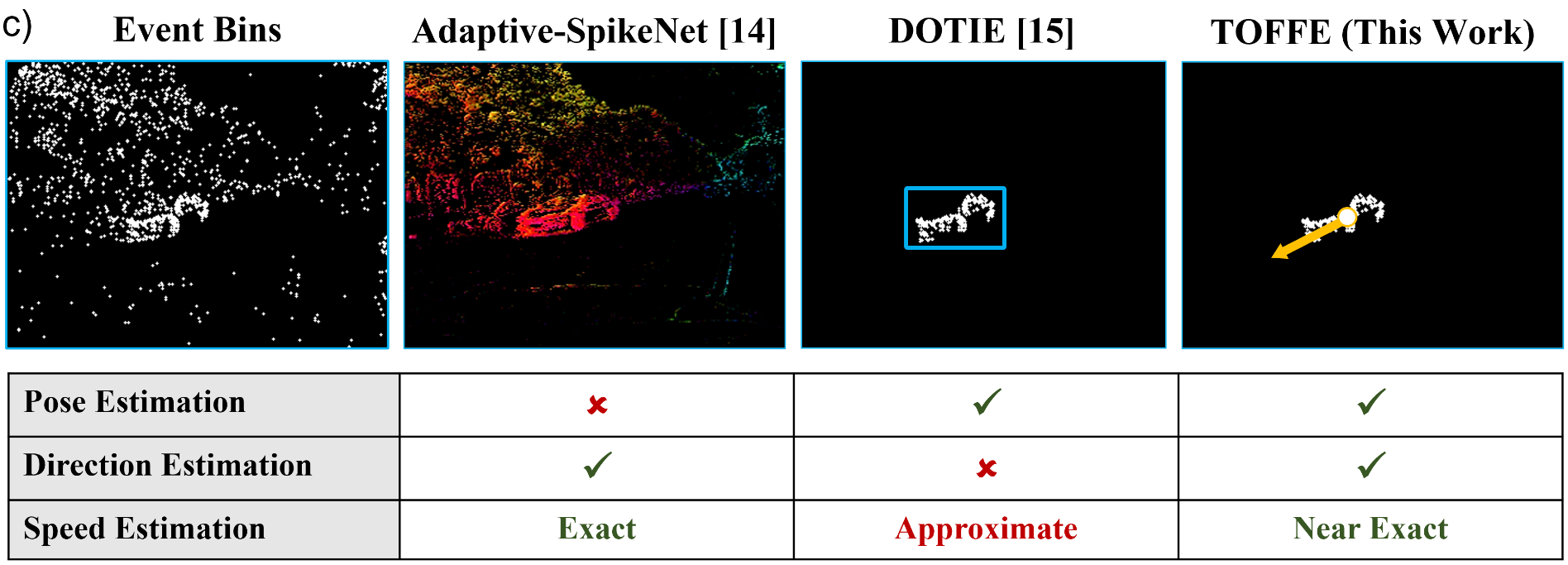

Object detection and tracking is an essential perception task for enabling fully autonomous navigation in robotic systems. Edge robot systems such as small drones need to execute complex maneuvers at high speeds with limited resources, which places strict constraints on the underlying algorithms and hardware. Traditionally, frame-based cameras are used for vision-based perception due to their rich spatial information and simplified synchronous sensing capabilities. However, obtaining detailed information across frames incurs high energy consumption and may not even be required. In addition, their low temporal resolution renders them ineffective in high-speed motion scenarios. Event-based cameras offer a biologically-inspired solution to this by capturing only changes in intensity levels at exceptionally high temporal resolution and low power consumption, making them ideal for high-speed motion scenarios. However, their asynchronous and sparse outputs are not natively compatible with conventional deep learning methods. In this work, we propose TOFFE, a lightweight hybrid framework for performing event-based object motion estimation (including pose, direction, and speed estimation), referred to as Object Flow. TOFFE integrates bio-inspired Spiking Neural Networks (SNNs) and conventional Analog Neural Networks (ANNs), to efficiently process events at high temporal resolutions while being simple to train. Additionally, we present a novel event-based synthetic dataset involving high-speed object motion to train TOFFE. Our experimental results show that TOFFE achieves 5.7x/8.3x reduction in energy consumption and 4.6x/5.8x reduction in latency on edge GPU (Jetson TX2)/hybrid hardware (Loihi-2 and Jetson TX2), compared to previous event-based object detection baselines.

@article{kosta2025toffe, title = {TOFFE--Temporally-binned Object Flow from Events for High-speed and Energy-Efficient Object Detection and Tracking}, author = {Kosta, Adarsh Kumar and Joshi, Amogh and Roy, Arjun and Manna, Rohan Kumar and Nagaraj, Manish and Roy, Kaushik}, journal = {arXiv preprint arXiv:2501.12482}, year = {2025}, url = {https://arxiv.org/pdf/2501.12482}, doi = {10.48550/arXiv.2501.12482} }

- Energy-Efficient Autonomous Aerial Navigation with Dynamic Vision Sensors: A Physics-Guided Neuromorphic Approach. Sourav Sanyal, Amogh Joshi, Manish Nagaraj, Rohan Kumar Manna, and Kaushik Roy. arXiv preprint arXiv:2502.05938, 2025.

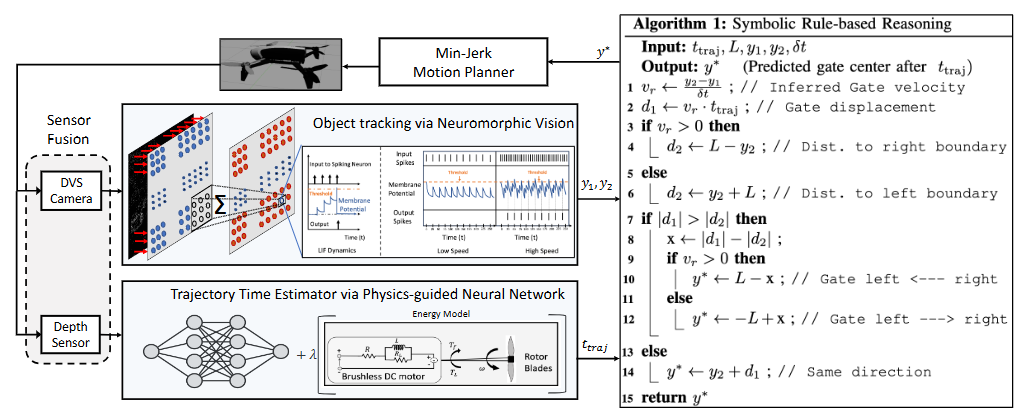

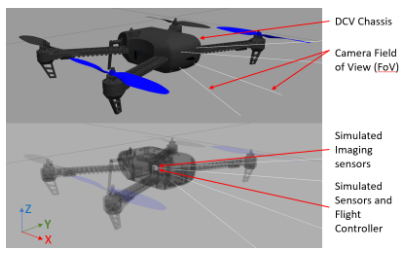

Vision-based object tracking is a critical component for achieving autonomous aerial navigation, particularly for obstacle avoidance. Neuromorphic Dynamic Vision Sensors (DVS) or event cameras, inspired by biological vision, offer a promising alternative to conventional frame-based cameras. These cameras can detect changes in intensity asynchronously, even in challenging lighting conditions, with a high dynamic range and resistance to motion blur. Spiking neural networks (SNNs) are increasingly used to process these event-based signals efficiently and asynchronously. Meanwhile, physics-based artificial intelligence (AI) provides a means to incorporate system-level knowledge into neural networks via physical modeling. This enhances robustness, energy efficiency, and provides symbolic explainability. In this work, we present a neuromorphic navigation framework for autonomous drone navigation. The focus is on detecting and navigating through moving gates while avoiding collisions. We use event cameras for detecting moving objects through a shallow SNN architecture in an unsupervised manner. This is combined with a lightweight energy-aware physics-guided neural network (PgNN) trained with depth inputs to predict optimal flight times, generating near-minimum energy paths. The system is implemented in the Gazebo simulator and integrates a sensor-fused vision-to-planning neuro-symbolic framework built with the Robot Operating System (ROS) middleware. This work highlights the future potential of integrating event-based vision with physics-guided planning for energy-efficient autonomous navigation, particularly for low-latency decision-making.

@article{sanyal2025energy, title = {Energy-Efficient Autonomous Aerial Navigation with Dynamic Vision Sensors: A Physics-Guided Neuromorphic Approach}, author = {Sanyal, Sourav and Joshi, Amogh and Nagaraj, Manish and Manna, Rohan Kumar and Roy, Kaushik}, journal = {arXiv preprint arXiv:2502.05938}, year = {2025}, doi = {10.48550/arXiv.2502.05938} }

- Coresets from Trajectories: Selecting Data via Correlation of Loss Differences. Manish Nagaraj, Deepak Ravikumar, and Kaushik Roy. Transactions on Machine Learning Research, 2025.

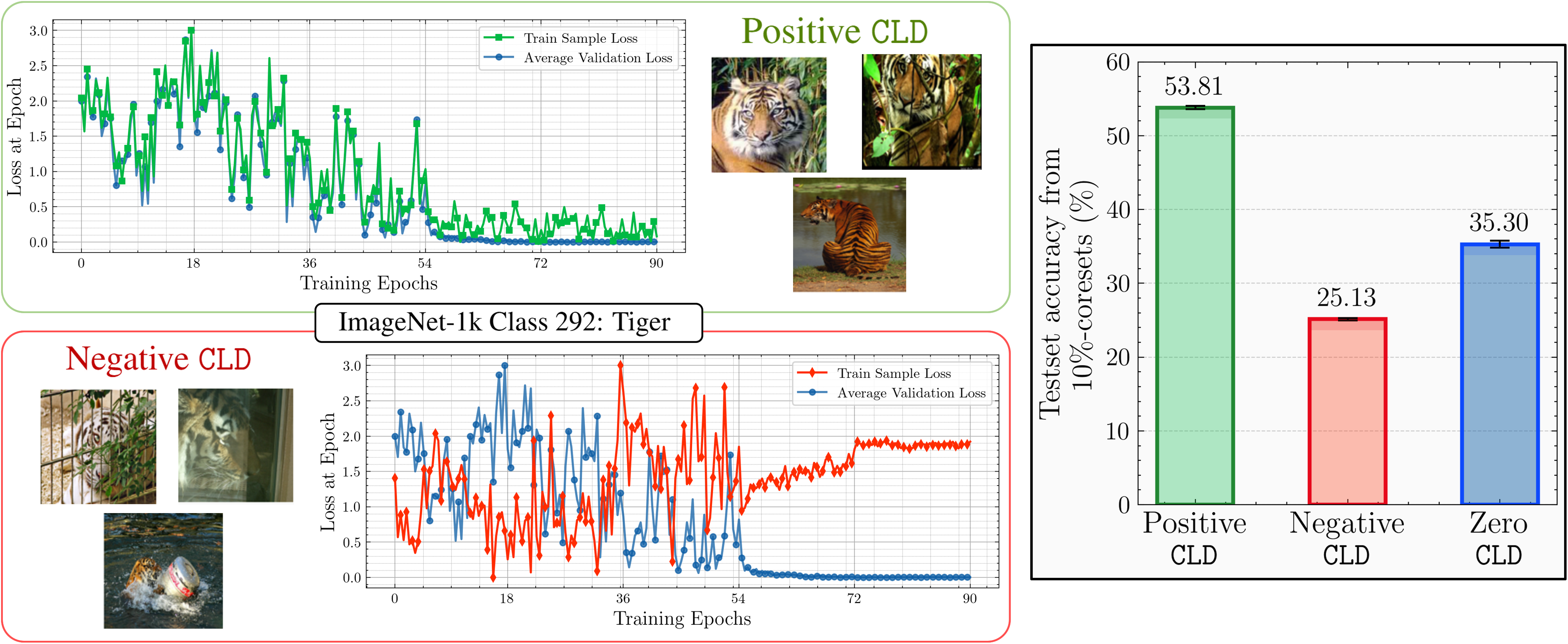

Deep learning models achieve state-of-the-art performance across domains but face scalability challenges in real-time or resource-constrained scenarios. To address this, we propose Correlation of Loss Differences (CLD), a simple and scalable metric for coreset selection that identifies the most impactful training samples by measuring their alignment with the loss trajectories of a held-out validation set. CLD is highly efficient, requiring only per-sample loss values computed at training checkpoints, and avoiding the costly gradient and curvature computations used in many existing subset selection methods. We develop a general theoretical framework that establishes convergence guarantees for CLD-based coresets, demonstrating that the convergence error is upper-bounded by the alignment of the selected samples and the representativeness of the validation set. On CIFAR-100 and ImageNet-1k, CLD-based coresets typically outperform or closely match state-of-the-art methods across subset sizes, and remain within 1% of more computationally expensive baselines even when not leading. CLD transfers effectively across architectures (ResNet, VGG, DenseNet), enabling proxy-to-target selection with < 1% degradation. Moreover, CLD is stable when using only early checkpoints, incurring negligible accuracy loss. Finally, CLD exhibits inherent bias reduction via per-class validation alignment, obviating the need for additional stratified sampling. Together, these properties make CLD a principled, efficient, stable, and transferable tool for scalable dataset optimization.
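
As a rough illustration of the idea in this abstract, the sketch below scores each training sample by the Pearson correlation between its checkpoint-to-checkpoint loss differences and those of the mean validation loss, then keeps the top-scoring samples. This is one plausible reading of "correlation of loss differences", not the paper's exact definition: the function names (`cld_scores`, `select_coreset`), the use of a single mean validation trajectory, and the toy loss values are all assumptions made for the example.

```python
from math import sqrt


def loss_differences(losses):
    """Differences between losses recorded at consecutive checkpoints."""
    return [later - earlier for earlier, later in zip(losses, losses[1:])]


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def cld_scores(train_losses, val_losses):
    """Score each training sample against the mean validation trajectory.

    train_losses: one loss trajectory (list of floats) per training sample.
    val_losses:   one loss trajectory per validation sample, same checkpoints.
    Assumption: validation trajectories are averaged into a single reference.
    """
    n_checkpoints = len(val_losses[0])
    mean_val = [
        sum(traj[t] for traj in val_losses) / len(val_losses)
        for t in range(n_checkpoints)
    ]
    val_diff = loss_differences(mean_val)
    return [pearson(loss_differences(traj), val_diff) for traj in train_losses]


def select_coreset(train_losses, val_losses, k):
    """Indices of the k training samples with the highest scores."""
    scores = cld_scores(train_losses, val_losses)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


# Hypothetical losses at four checkpoints for three training samples.
train = [
    [2.0, 1.5, 1.0, 0.8],
    [2.0, 2.1, 2.0, 2.2],
    [1.0, 0.6, 0.3, 0.2],
]
val = [[2.0, 1.4, 1.0, 0.9], [1.8, 1.3, 0.9, 0.7]]
print(select_coreset(train, val, k=2))
```

Only forward-pass loss values are needed here, which mirrors the abstract's point that no gradient or curvature computation is required.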

@article{nagaraj2025coresets, title = {Coresets from Trajectories: Selecting Data via Correlation of Loss Differences}, author = {Nagaraj, Manish and Ravikumar, Deepak and Roy, Kaushik}, journal = {Transactions on Machine Learning Research}, issn = {2835-8856}, year = {2025}, url = {https://openreview.net/forum?id=QY0pbZTWJ9}, doi = {10.48550/arXiv.2508.20230}, }

- TRIM: Token-wise Attention-Derived Saliency for Data-Efficient Instruction Tuning. Manish Nagaraj, Sakshi Choudhary, Utkarsh Saxena, Deepak Ravikumar, and Kaushik Roy. 2025.

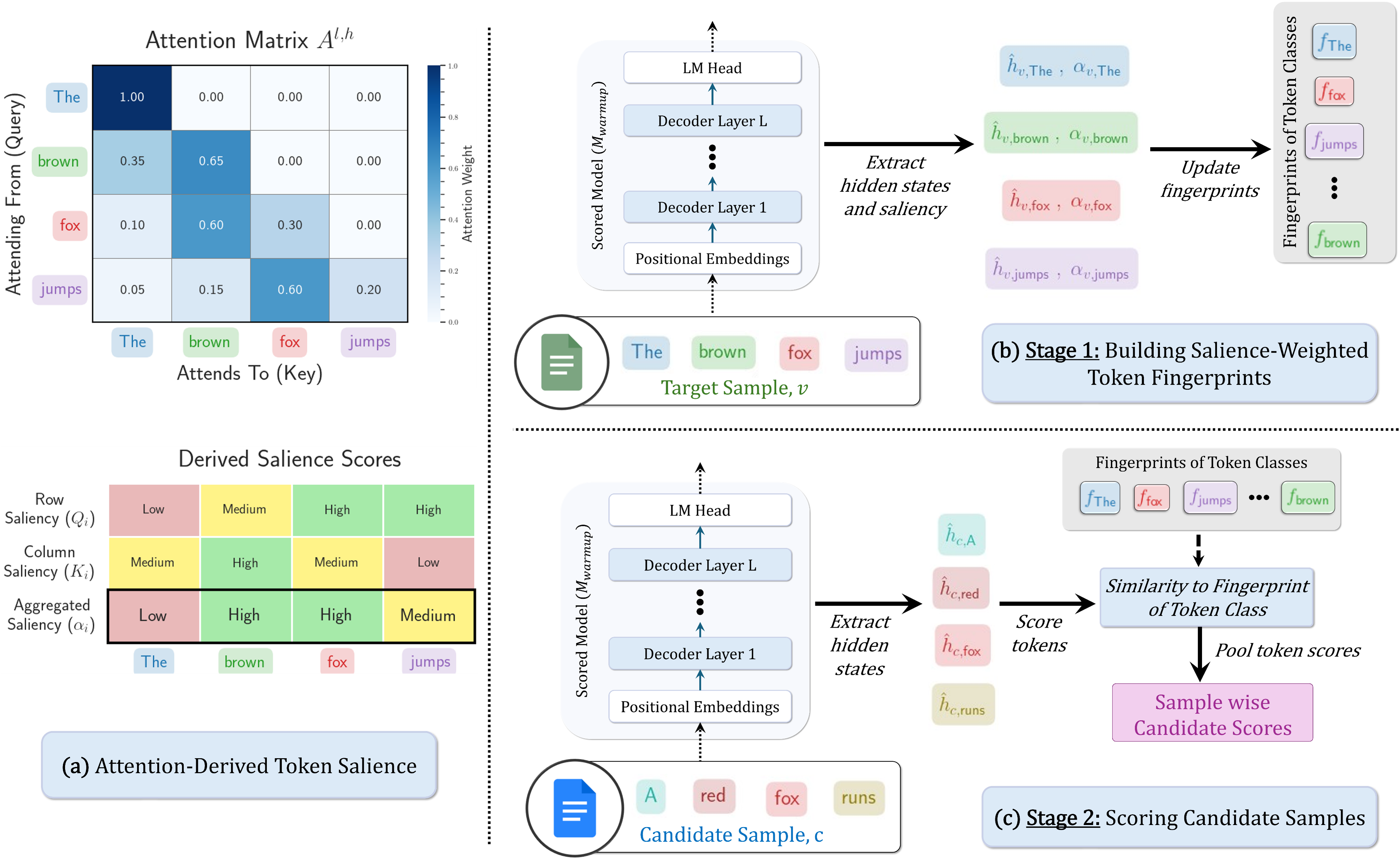

Instruction tuning is essential for aligning large language models (LLMs) to downstream tasks and commonly relies on large, diverse corpora. However, small, high-quality subsets, known as coresets, can deliver comparable or superior results, though curating them remains challenging. Existing methods often rely on coarse, sample-level signals like gradients, an approach that is computationally expensive and overlooks fine-grained features. To address this, we introduce TRIM (Token Relevance via Interpretable Multi-layer Attention), a forward-only, token-centric framework. Instead of using gradients, TRIM operates by matching underlying representational patterns identified via attention-based "fingerprints" from a handful of target samples. Such an approach makes TRIM highly efficient and uniquely sensitive to the structural features that define a task. Coresets selected by our method consistently outperform state-of-the-art baselines by up to 9% on downstream tasks and even surpass the performance of full-data fine-tuning in some settings. By avoiding expensive backward passes, TRIM achieves this at a fraction of the computational cost. These findings establish TRIM as a scalable and efficient alternative for building high-quality instruction-tuning datasets.

@misc{nagaraj2025trim, title = {TRIM: Token-wise Attention-Derived Saliency for Data-Efficient Instruction Tuning}, author = {Nagaraj, Manish and Choudhary, Sakshi and Saxena, Utkarsh and Ravikumar, Deepak and Roy, Kaushik}, year = {2025}, eprint = {2510.07118}, archiveprefix = {arXiv}, primaryclass = {cs.CL}, url = {https://arxiv.org/abs/2510.07118}, doi = {10.48550/arXiv.2510.07118}, }

- Unifying Global Topology Manifolds and Local Persistent Homology for Data Pruning. Arjun Roy, Prajna G. Malettira, Manish Nagaraj, and Kaushik Roy. In NeurIPS 2025 Workshop on Symmetry and Geometry in Neural Representations, 2025.

2024

- FEDORA: A Flying Event Dataset fOr Reactive behAvior. Amogh Joshi, Wachirawit Ponghiran, Adarsh Kosta, Manish Nagaraj, and Kaushik Roy. In 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024.

The ability of resource-constrained biological systems such as fruitflies to perform complex and high-speed maneuvers in cluttered environments has been one of the prime sources of inspiration for developing vision-based autonomous systems. To emulate this capability, the perception pipeline of such systems must integrate information cues from tasks including optical flow and depth estimation, object detection and tracking, and segmentation, among others. However, the conventional approach of employing slow, synchronous inputs from standard frame-based cameras constrains these perception capabilities, particularly during high-speed maneuvers. Recently, event-based sensors have emerged as low latency and low energy alternatives to standard frame-based cameras for capturing high-speed motion, effectively speeding up perception and hence navigation. For coherence, all the perception tasks must be trained on the same input data. However, present-day datasets are curated mainly for a single or a handful of tasks and are limited in the rate of the provided ground truths. To address these limitations, we present Flying Event Dataset fOr Reactive behAviour (FEDORA) - a fully synthetic dataset for perception tasks, with raw data from frame-based cameras, event-based cameras, and Inertial Measurement Units (IMU), along with ground truths for depth, pose, and optical flow at a rate much higher than existing datasets.

@inproceedings{joshi2024fedora, title = {FEDORA: A Flying Event Dataset fOr Reactive behAvior}, author = {Joshi, Amogh and Ponghiran, Wachirawit and Kosta, Adarsh and Nagaraj, Manish and Roy, Kaushik}, booktitle = {2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, pages = {5859--5866}, year = {2024}, organization = {IEEE}, doi = {10.1109/IROS58592.2024.10801807}, url = {https://ieeexplore.ieee.org/document/10801807} }

- TOFU: Towards Obfuscated Federated Updates by Encoding Weight Updates into Gradients from Proxy Data. Manish Nagaraj, Isha Garg, and Kaushik Roy. IEEE Access, 2024.

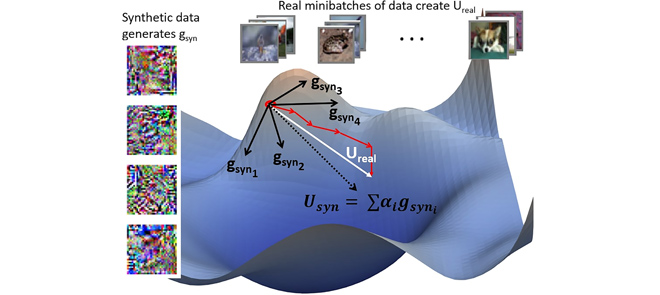

Advances in Federated Learning and an abundance of user data have enabled rich collaborative learning between multiple clients, without sharing user data. This is done via a central server that aggregates learning in the form of weight updates. However, this comes at the cost of repeated expensive communication between the clients and the server, and concerns about compromised user privacy. The inversion of gradients into the data that generated them is termed data leakage. Encryption techniques can be used to counter this leakage but at added expense. To address these challenges of communication efficiency and privacy, we propose TOFU, a novel algorithm that generates proxy data that encodes the weight updates for each client in its gradients. Instead of weight updates, this proxy data is now shared. Since input data is far lower in dimensional complexity than weights, this encoding allows us to send much less data per communication round. Additionally, the proxy data resembles noise and even perfect reconstruction from data leakage attacks would invert the decoded gradients into unrecognizable noise, enhancing privacy. We show that TOFU enables learning with less than 1% and 7% accuracy drops on MNIST and CIFAR-10 datasets, respectively. This drop can be recovered via a few rounds of expensive encrypted gradient exchange. This enables us to learn to near-full accuracy in a federated setup, while being 4x and 6.6x more communication efficient than the standard Federated Averaging algorithm on MNIST and CIFAR-10, respectively.

@article{nagaraj2024tofu, title = {TOFU: Towards Obfuscated Federated Updates by Encoding Weight Updates into Gradients from Proxy Data}, author = {Nagaraj, Manish and Garg, Isha and Roy, Kaushik}, journal = {IEEE Access}, year = {2024}, publisher = {IEEE}, doi = {10.1109/ACCESS.2024.3390716}, url = {https://ieeexplore.ieee.org/abstract/document/10504799}, }

- Driving Autonomy with Event-Based Cameras: Algorithm and Hardware Perspectives. Nael Mizanur Rahman, Uday Kamal, Manish Nagaraj, Shaunak Roy, and Saibal Mukhopadhyay. In 2024 Design, Automation & Test in Europe Conference & Exhibition (DATE), 2024.

In high-speed robotics and autonomous vehicles, rapid environmental adaptation is necessary. Traditional cameras often face issues with motion blur and limited dynamic range. Event-based cameras address these by tracking pixel changes continuously and asynchronously, offering higher temporal resolution with minimal blur. In this work, we highlight our recent efforts in solving the challenge of processing event-camera data efficiently from both algorithm and hardware perspectives. Specifically, we present how brain-inspired algorithms such as spiking neural networks (SNNs) can efficiently detect and track object motion from event-camera data. Next, we discuss how we can leverage associative memory structures for efficient event-based representation learning. And finally, we show how our developed Application Specific Integrated Circuit (ASIC) architecture for low-latency, energy-efficient processing outperforms typical GPU/CPU solutions, thus enabling real-time event-based processing. With a 100x reduction in latency and a 1000x lower energy per event compared to state-of-the-art GPU/CPU setups, this enhances the front-end camera system's capability in autonomous vehicles to handle higher rates of event generation, improving control.

@inproceedings{rahman2024driving, title = {Driving Autonomy with Event-Based Cameras: Algorithm and Hardware Perspectives}, author = {Rahman, Nael Mizanur and Kamal, Uday and Nagaraj, Manish and Roy, Shaunak and Mukhopadhyay, Saibal}, booktitle = {2024 Design, Automation \& Test in Europe Conference \& Exhibition (DATE)}, pages = {1--6}, year = {2024}, organization = {IEEE}, doi = {10.23919/DATE58400.2024.10546715}, url = {https://ieeexplore.ieee.org/document/10546715} }

- Exploring Foveation and Saccade for Improved Weakly-Supervised Localization. Timur Ibrayev, Manish Nagaraj, Amitangshu Mukherjee, and Kaushik Roy. In NeurIPS Gaze Meets Machine Learning Workshop, 2024.

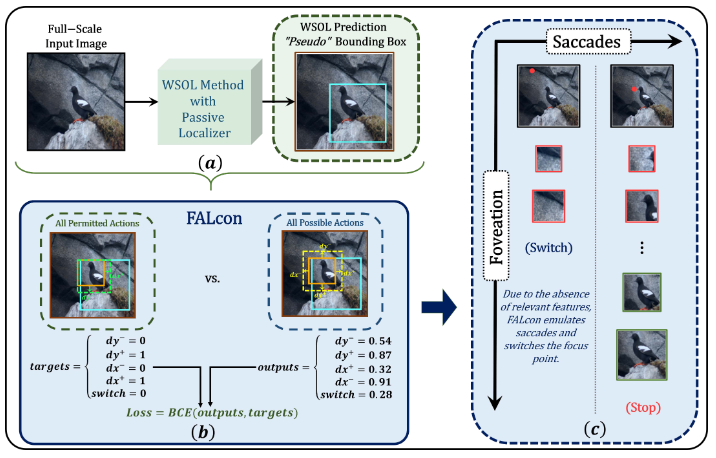

Deep neural networks have become the de facto choice as feature extraction engines, ubiquitously used for computer vision tasks. The current approach is to process every input with uniform resolution in a one-shot manner and make all of the predictions at once. However, human vision is an "active" process that not only actively switches from one focus point to another within the visual field, but also applies spatially varying attention centered at such focus points. To bridge the gap, we propose incorporating the bio-plausible mechanisms of foveation and saccades to build an active object localization framework. While foveation enables it to process different regions of the input with variable degrees of detail, saccades allow it to change the focus point of such foveated regions. Our experiments show that these mechanisms improve the quality of predicted bounding boxes by capturing all the essential object parts while minimizing unnecessary background clutter. Additionally, they enable the resiliency of the method by allowing it to detect multiple objects while being trained only on data containing a single object per image. Finally, we explore the alignment of our method with human perception using the interesting "duck-rabbit" optical illusion.

@inproceedings{ibrayev2023exploring, title = {Exploring Foveation and Saccade for Improved Weakly-Supervised Localization}, author = {Ibrayev, Timur and Nagaraj, Manish and Mukherjee, Amitangshu and Roy, Kaushik}, booktitle = {NeurIPS Gaze Meets Machine Learning Workshop}, pages = {61--89}, year = {2024}, organization = {PMLR}, url = {https://proceedings.mlr.press/v226/ibrayev24a/ibrayev24a.pdf}, }

2023

- Dotie-detecting objects through temporal isolation of events using a spiking architecture. Manish Nagaraj, Chamika Mihiranga Liyanagedera, and Kaushik Roy. In 2023 IEEE International Conference on Robotics and Automation (ICRA), 2023.

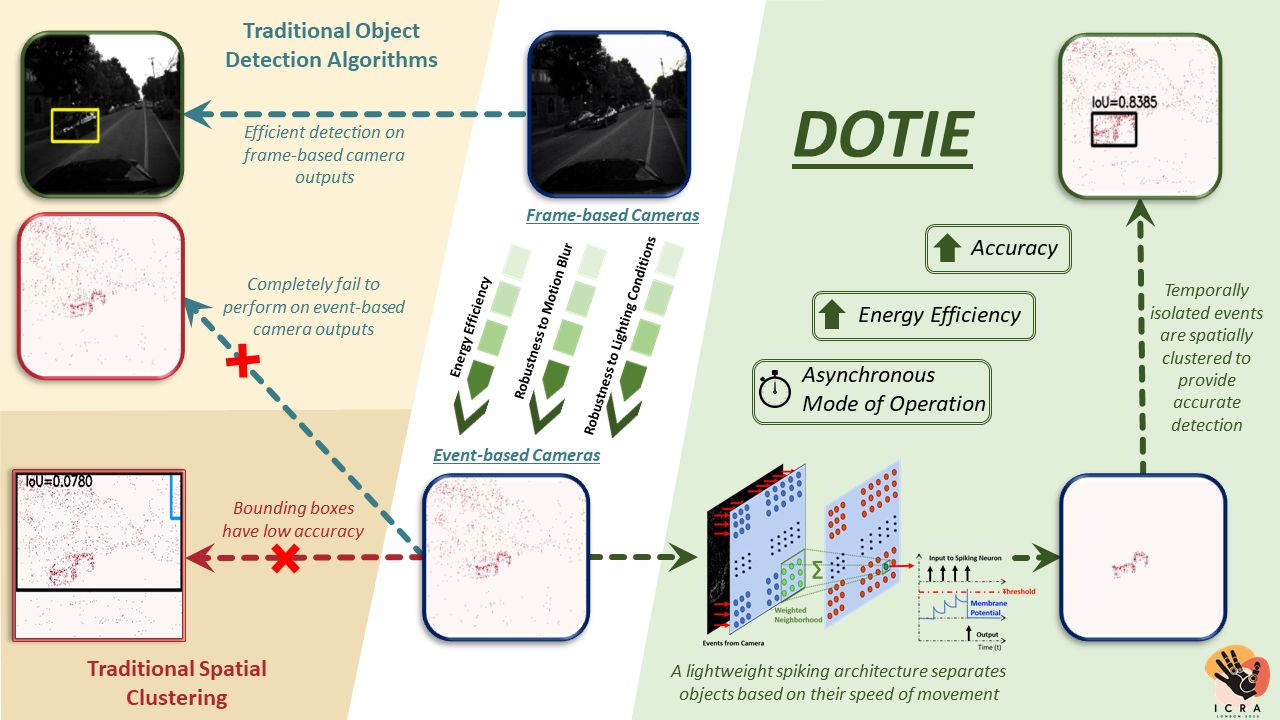

Vision-based autonomous navigation systems rely on fast and accurate object detection algorithms to avoid obstacles. Algorithms and sensors designed for such systems need to be computationally efficient, due to the limited energy of the hardware used for deployment. Biologically inspired event cameras are a good candidate as a vision sensor for such systems due to their speed, energy efficiency, and robustness to varying lighting conditions. However, traditional computer vision algorithms fail to work on event-based outputs, as they lack photometric features such as light intensity and texture. In this work, we propose a novel technique that utilizes the temporal information inherently present in the events to efficiently detect moving objects. Our technique consists of a lightweight spiking neural architecture that is able to separate events based on the speed of the corresponding objects. These separated events are then further grouped spatially to determine object boundaries. This method of object detection is both asynchronous and robust to camera noise. In addition, it shows good performance in scenarios with events generated by static objects in the background, where existing event-based algorithms fail. We show that by utilizing our architecture, autonomous navigation systems can have minimal latency and energy overheads for performing object detection.
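
To make the notion of separating events by speed more concrete, here is a minimal sketch of a generic leaky integrate-and-fire (LIF) neuron acting as a temporal filter. It is a textbook LIF model, not the architecture from the paper: the function name `lif_spike_times` and the parameters `tau`, `threshold`, and `weight` are hypothetical and chosen only for illustration. The membrane potential decays between input events, so only events that arrive in quick succession (as a fast-moving object would produce at a pixel) accumulate enough to cross the threshold.

```python
from math import exp


def lif_spike_times(event_times, tau=5.0, threshold=2.0, weight=1.0):
    """Return the input timestamps at which a leaky integrate-and-fire neuron spikes.

    event_times: sorted timestamps of input events (arbitrary time units).
    tau:         membrane time constant; smaller values forget faster (assumed).
    threshold:   potential at which the neuron fires and resets (assumed).
    weight:      potential added by each input event (assumed).
    """
    potential = 0.0
    last_time = None
    spikes = []
    for t in event_times:
        if last_time is not None:
            # Exponential leak over the interval since the previous event.
            potential *= exp(-(t - last_time) / tau)
        potential += weight
        last_time = t
        if potential >= threshold:
            spikes.append(t)
            potential = 0.0  # reset after firing
    return spikes


# Densely spaced events (fast motion) drive the neuron over threshold.
fast_events = [0, 1, 2, 3, 4, 5]
# Widely spaced events (slow motion or noise) leak away before accumulating.
slow_events = [0, 10, 20, 30, 40]

print(lif_spike_times(fast_events))
print(lif_spike_times(slow_events))
```

With these example settings, the neuron responds to the dense stream and stays silent for the sparse one. Tuning `tau` and `threshold` would shift the range of event rates that pass through.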

- EESMR: Energy Efficient BFT-SMR for the masses. Adithya Bhat, Akhil Bandarupalli, Manish Nagaraj, Saurabh Bagchi, Aniket Kate, and Michael K Reiter. In Proceedings of the 24th International Middleware Conference, 2023.

Modern Byzantine Fault-Tolerant State Machine Replication (BFT-SMR) solutions focus on reducing communication complexity, improving throughput, or lowering latency. This work explores the energy efficiency of BFT-SMR protocols. First, we propose a novel SMR protocol that optimizes for the steady state, i.e., when the leader is correct. This is done by reducing the number of required signatures per consensus unit and the communication complexity by order of the number of nodes n compared to the state-of-the-art BFT-SMR solutions. Concretely, we employ the idea that a quorum (collection) of signatures on a proposed value is avoidable during the failure-free runs. Second, we model and analyze the energy efficiency of protocols and argue why the steady-state needs to be optimized. Third, we present an application in the cyber-physical system (CPS) setting, where we consider a partially connected system by optionally leveraging wireless multicasts among neighbors. We analytically determine the parameter ranges for when our proposed protocol offers better energy efficiency than communicating with a baseline protocol utilizing an external trusted node. We present a hypergraph-based network model and generalize previous fault tolerance results to the model. Finally, we demonstrate our approach’s practicality by analyzing our protocol’s energy efficiency through experiments on a CPS test bed. In particular, we observe as high as 64% energy savings when compared to the state-of-the-art SMR solution for n=10 settings using BLE.

@inproceedings{bhat2023eesmr, title = {EESMR: Energy Efficient BFT---SMR for the masses}, author = {Bhat, Adithya and Bandarupalli, Akhil and Nagaraj, Manish and Bagchi, Saurabh and Kate, Aniket and Reiter, Michael K}, booktitle = {Proceedings of the 24th International Middleware Conference}, pages = {1--14}, year = {2023}, url = {https://dl.acm.org/doi/10.1145/3590140.3592848}, doi = {10.1145/3590140.3592848} }

- Live demonstration: Real-time event-based speed detection using spiking neural networks. Arjun Roy, Manish Nagaraj, Chamika Mihiranga Liyanagedera, and Kaushik Roy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR Workshops), 2023.

Event cameras are emerging as an ideal vision sensor for high-speed applications due to their low latency and power consumption. DOTIE, a recent work in literature, has proposed a method to detect objects through spatial and temporal isolation of events with a spiking neural network. In this work, we implement DOTIE to detect a disk moving in a circular motion and identify the speed of rotation. We further validate the claim that spiking architectures can efficiently handle events by implementing DOTIE on Intel Loihi, a neuromorphic hardware suitable for spiking neural networks, and reveal a 14× reduction in energy consumption compared to the CPU implementation of DOTIE.

- Low-Power Real-Time Sequential Processing with Spiking Neural Networks. Chamika Mihiranga Liyanagedera, Manish Nagaraj, Wachirawit Ponghiran, and Kaushik Roy. In 2023 IEEE International Symposium on Circuits and Systems (ISCAS), 2023.

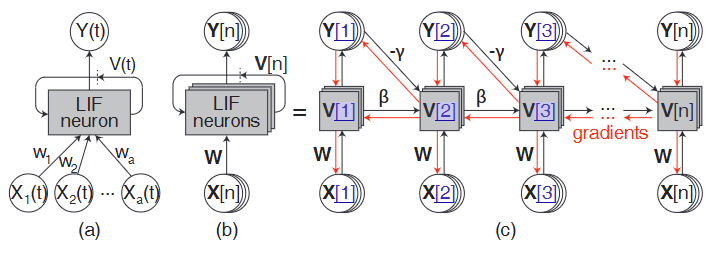

The biological brain is capable of processing temporal information at an incredible efficiency. Even with modern computing resources, traditional learning-based approaches are struggling to match its performance. Spiking neural networks that "mimic" certain functionalities of the biological neural networks in the brain are a promising avenue for solving sequential learning problems with high computational efficiency. Nonetheless, training such networks still remains a challenging task as conventional learning rules are not directly applicable to these bio-inspired neural networks. Recent efforts have focused on novel training paradigms that allow spiking neural networks to learn temporal correlations between inputs and solve sequential tasks such as audio or video processing. Such success has fueled the development of event-driven neuromorphic hardware that is specifically optimized for energy-efficient implementation of spiking neural networks. This paper highlights the ongoing development of spiking neural networks for low-power real-time sequential processing and the potential to improve their training through an understanding of the information flow.

@inproceedings{liyanagedera2023low, title = {Low-Power Real-Time Sequential Processing with Spiking Neural Networks}, author = {Liyanagedera, Chamika Mihiranga and Nagaraj, Manish and Ponghiran, Wachirawit and Roy, Kaushik}, booktitle = {2023 IEEE International Symposium on Circuits and Systems (ISCAS)}, pages = {1--5}, year = {2023}, organization = {IEEE}, doi = {10.1109/ISCAS46773.2023.10181703}, url = {https://ieeexplore.ieee.org/document/10181703}, }

2019

- Panel 3 position paper: Blockchain can be the backbone of India's economy. Manish Nagaraj and Somali Chaterji. In 2019 11th International Conference on Communication Systems & Networks (COMSNETS), 2019.

@inproceedings{nagaraj2019panel, title = {Panel 3 position paper: Blockchain can be the backbone of India's economy}, author = {Nagaraj, Manish and Chaterji, Somali}, booktitle = {2019 11th International Conference on Communication Systems \& Networks (COMSNETS)}, pages = {523--526}, year = {2019}, organization = {IEEE}, doi = {10.1109/COMSNETS.2019.8711052} }

- Energy Efficient Byzantine Agreement Protocols for Cyber Physical Resilience. Manish Nagaraj. Master's thesis, Purdue University, 2019.

@mastersthesis{nagaraj2019energy, title = {Energy Efficient Byzantine Agreement Protocols for Cyber Physical Resilience}, author = {Nagaraj, Manish}, year = {2019}, school = {Purdue University}, doi = {10.25394/PGS.8035430} }

2016

-

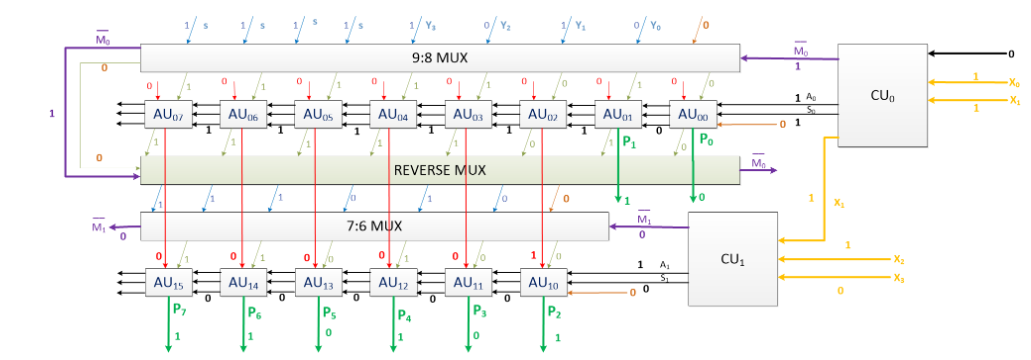

Reversible radix-4 booth multiplier for DSP applicationsAN Nagamani, R Nikhil, Manish Nagaraj, and Vinod Kumar AgrawalIn 2016 International Conference on Signal Processing and Communications (SPCOM), 2016

Power dissipation has become the major concern for circuit design and implementation. Reversible Logic is the best alternative to Irreversible Logic in terms of low power consumption. Circuits designed using reversible logic have a wide array of applications. The Quantum Cost, Garbage Outputs, Ancillary Inputs and Delay are some of the parameters of reversible circuits that can be used to determine their efficiency and compare them with existing works. Optimization of these parameters is highly essential. Garbage Outputs is an important parameter that must be considered. This paper presents a design for a Reversible Radix-4 Booth Multiplier that is optimized in Garbage Cost and Ancillary inputs. The design proposed is capable of both signed and unsigned multiplication. The optimization in Garbage Cost ensures lower heat dissipation. The Encoded Booth Algorithm or Radix-4 Booth Algorithm reduces the number of partial products generated in signed multiplication to half the number generated using a Radix-2 signed multiplier, making it suitable for Digital Signal Processors. The design proposed is compared to existing multiplier circuits and the parameters are tabulated.
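
The halving of partial products mentioned in this abstract can be seen in a small software sketch of radix-4 Booth recoding. This illustrates only the standard arithmetic recoding, not the reversible-logic circuit from the paper; the function names `booth_radix4_digits` and `booth_multiply` are hypothetical. Each pair of multiplier bits, together with the bit below it, is mapped to a digit in {-2, -1, 0, 1, 2}, so an n-bit two's-complement multiplier yields n/2 partial products.

```python
def booth_radix4_digits(multiplier, bits):
    """Recode a signed multiplier into radix-4 Booth digits, least significant first.

    multiplier: integer representable in `bits`-bit two's complement.
    bits:       even word width.
    Each digit is -2*y[2k+1] + y[2k] + y[2k-1], with y[-1] taken as 0.
    """
    if bits <= 0 or bits % 2 != 0:
        raise ValueError("bits must be a positive even number")
    if not -(1 << (bits - 1)) <= multiplier < (1 << (bits - 1)):
        raise ValueError("multiplier does not fit in the given width")
    pattern = multiplier & ((1 << bits) - 1)  # two's-complement bit pattern
    digits = []
    previous_bit = 0  # implicit bit below the least significant bit
    for i in range(0, bits, 2):
        low = (pattern >> i) & 1
        high = (pattern >> (i + 1)) & 1
        digits.append(-2 * high + low + previous_bit)
        previous_bit = high
    return digits


def booth_multiply(multiplicand, multiplier, bits):
    """Multiply by summing one partial product per radix-4 Booth digit."""
    product = 0
    for k, digit in enumerate(booth_radix4_digits(multiplier, bits)):
        # Digit k carries weight 4**k; digits of magnitude 2 are a single shift in hardware.
        product += digit * multiplicand * (4 ** k)
    return product


print(booth_radix4_digits(7, 4))  # two digits for a 4-bit multiplier
print(booth_multiply(-6, 7, 4))
```

An unsigned operand can be handled by the same routine by widening it with two leading zero bits so that it is non-negative in two's complement, for example `booth_multiply(3, 13, 6)` for the 4-bit unsigned value 13.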

@inproceedings{nagamani2016reversible, title = {Reversible radix-4 booth multiplier for DSP applications}, author = {Nagamani, AN and Nikhil, R and Nagaraj, Manish and Agrawal, Vinod Kumar}, booktitle = {2016 International Conference on Signal Processing and Communications (SPCOM)}, pages = {1--5}, year = {2016}, organization = {IEEE}, doi = {10.1109/SPCOM.2016.7746687} }